Logging and Monitoring for Amazon API Applications

Plan and implement a log monitoring process and secure your log files.

Overview

It is important to know who is accessing your network instances, what actions they took, and which APIs they called. A log monitoring mechanism that keeps track of network traffic provides this visibility: it can continuously check network connections and channels and send alerts when it detects unusual activity.

This whitepaper discusses the importance of logging and monitoring, planning and implementing a log monitoring process/mechanism, and the relevance of securing log files. To identify and prevent possible security threats within the network, developers must control and keep records of the actions taken, user access, and other log information.

Data Protection Policy (DPP) requirements

Logging and Monitoring: Developers must gather logs to detect security-related events affecting their applications and systems including:

- Success or failure of the event

- Date and time

- Access attempts

- Data changes

- System errors

Developers must implement this logging mechanism on all channels that provide access to Information (for example, service APIs, storage-layer APIs, and administrative dashboards).

Developers must review logs either in real time (for example, with a SIEM tool) or on a twice-a-week basis. Logs must not contain personally identifiable information (PII) unless the PII is necessary to meet legal requirements, including tax or regulatory requirements. Unless otherwise required by applicable law, logs must be retained for at least 90 days for reference in the case of a security incident.
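One way to keep PII out of logs is to scrub messages at the point where they are emitted. The following is a minimal sketch, assuming Python's standard `logging` module. The two regular expressions are illustrative assumptions only: they mask e-mail addresses and phone-number-like digit runs, will miss many other PII types listed in the DPP, and can over-match (for example, long numeric order IDs).

```python
import logging
import re

# Illustrative patterns only; a production filter needs a reviewed, broader rule set.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


class RedactPiiFilter(logging.Filter):
    """Masks e-mail addresses and phone-number-like strings before a record is emitted."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # apply %-style args first, then scrub
        message = EMAIL_RE.sub("[REDACTED_EMAIL]", message)
        message = PHONE_RE.sub("[REDACTED_PHONE]", message)
        # Replace the message so downstream handlers never see the original text.
        record.msg = message
        record.args = None
        return True  # keep the (now redacted) record


if __name__ == "__main__":
    handler = logging.StreamHandler()
    handler.addFilter(RedactPiiFilter())  # attach to the handler so every record passes through it
    logger = logging.getLogger("orders")
    logger.addHandler(handler)
    logger.warning("Password reset requested by %s", "jane@example.com")
```

Attaching the filter to the handler rather than to a single logger means records propagated from child loggers are scrubbed too.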

Developers must also build mechanisms that monitor the logs and all system activities and that initiate investigative alarms on suspicious actions (for example, multiple unauthorized calls, unexpected request rates and data retrieval volumes, and access to canary data records). Developers must implement monitoring alarms and processes to detect whether Information is extracted from, or can be found beyond, its protected boundaries. Developers should perform an investigation when a monitoring alarm is initiated, and this process should be documented in the Developer's Incident Response Plan.
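Canary data records are decoys that no legitimate workflow should ever read, so any access to one is worth an alarm. The sketch below illustrates that check; the record IDs, the `AccessEvent` shape, and the alarm text are hypothetical and stand in for whatever your access logs actually contain.

```python
from dataclasses import dataclass

# Hypothetical IDs of decoy records seeded into the data store.
CANARY_RECORD_IDS = frozenset({"order-canary-0001", "customer-canary-0002"})


@dataclass(frozen=True)
class AccessEvent:
    principal: str  # user or process that made the request
    record_id: str  # record that was read
    timestamp: str  # ISO 8601, UTC


def canary_alarms(events: list[AccessEvent]) -> list[str]:
    """Return one alarm message for every event that touched a canary record."""
    return [
        f"ALARM: {e.principal} accessed canary record {e.record_id} at {e.timestamp}"
        for e in events
        if e.record_id in CANARY_RECORD_IDS
    ]


events = [
    AccessEvent("svc-a", "order-123", "2025-01-01T00:00:00Z"),
    AccessEvent("svc-b", "order-canary-0001", "2025-01-01T00:01:00Z"),
]
for alarm in canary_alarms(events):
    print(alarm)
```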

About logging and monitoring

A log is a record of the events occurring within an organization’s systems and networks. Logs are composed of log entries, and each entry contains information related to a specific event that has occurred within a system or network. Although logs were originally used for troubleshooting, they now serve many other functions within organizations, such as optimizing system and network performance, recording the actions of users, and providing data for investigating malicious activity.

Within an organization, it is necessary to have logs containing records related to computer security. Common examples of these computer security logs are audit logs that track user authentication attempts and security device logs that record possible issues. This guide addresses only logs that typically contain computer security-related information.

Need for logging and monitoring

Log management facilitates secure record retention and storage in sufficient detail for a defined period of time. Routine log reviews and analysis are beneficial for identifying security incidents, policy violations, fraudulent activity, and operational problems shortly after they occur. They also provide information useful for resolving such issues.

Logs help with:

- Performing auditing and forensic analysis

- Supporting the organization’s internal investigations

- Establishing baselines

- Identifying operational trends and long-term problems

For example, an intrusion detection system might record malicious commands issued to a server from an external host, making it the primary source of event information. An incident responder then reviews a firewall log, a secondary source of event information, to look for other connection attempts from the same source IP address.
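The correlation step in this example can be sketched in a few lines. The CSV layout of the firewall export below is a hypothetical format chosen for illustration (the addresses are from documentation ranges); the point is filtering the secondary source by the source IP taken from the primary IDS alert.

```python
import csv
import io

# Hypothetical firewall log export: one CSV row per connection attempt.
FIREWALL_LOG = """timestamp,src_ip,dst_ip,dst_port,action
2025-01-10T08:00:01Z,198.51.100.7,10.0.0.5,22,DENY
2025-01-10T08:00:09Z,203.0.113.20,10.0.0.5,443,ALLOW
2025-01-10T08:02:44Z,198.51.100.7,10.0.0.9,3389,DENY
"""


def related_attempts(firewall_csv: str, src_ip: str) -> list[dict]:
    """Return every firewall entry whose source IP matches the IDS alert's source."""
    reader = csv.DictReader(io.StringIO(firewall_csv))
    return [row for row in reader if row["src_ip"] == src_ip]


# The IDS (primary source) flagged malicious commands from this external host.
ids_alert_src_ip = "198.51.100.7"
for row in related_attempts(FIREWALL_LOG, ids_alert_src_ip):
    print(row["timestamp"], row["dst_ip"], row["dst_port"], row["action"])
```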

Developers should be particularly cautious about the accuracy of logs from hosts that have been compromised. Log sources that are not properly secured, including insecure transport mechanisms, are susceptible to log configuration changes and log alteration.

Planning and implementation

Organizations are required to log privileged actions by operators on production hosts and all dependencies in systems containing Amazon information.

Developers can find additional details on logging in National Institute of Standards and Technology (NIST) control AU-2: Audit Events. This control ensures that developers define organizationally impactful security events. Although named audit events, this control is focused on logging, because logging is the mechanism by which developers capture and review (that is, audit) events. Compliance with the Amazon Data Protection Policy (DPP) requires that, at a minimum, the following actions are logged:

- Access and authorization

- Intrusion attempts

- Configuration changes

Furthermore, control AU-3: Content of Audit Records defines the types of content that should be logged. Pay particular attention to the supplemental guidance, as it highlights the necessary fields required by the Amazon DPP.

Additional recommended fields include time stamps, source and destination addresses, user or process identifiers, event descriptions, success/fail indications, filenames involved, and access control or flow control rules invoked.

Event outcomes can include indicators of event success or failure and event-specific results such as system security and privacy states after the event occurs. Should a security event occur, these fields will help developers appropriately target response efforts to the affected systems.

Developers should answer the following questions for all logs relating to applications and systems that provide access to Amazon information:

- What do the log groups consist of?

- What events and associated metadata do they capture?

- How is the log secured/protected at rest and in transit?

- What is the retention period for the logs?

- How are logs monitored and alarmed?

- Are the wire logs or service logs scrubbed for sensitive content (passwords, credentials, PII)?

- Which logs are utilized and correlated to support a breach notification or incident response?

- Do the logs capture all customer facing actions?

- Do the logs capture all internal (intra-service) and external (inter-service) API calls?

- Do the logs capture audit trails with respect to Amazon customer PII (for example, reads, writes, modify permissions, and deletes)?

Managing logs for critical transactions

For critical transactions involving Amazon information, all Add, Change/Modify, and Delete activities or transactions must generate a log entry. Each log entry should contain the following information:

- User identification information

- Types of events:

  - Account management events

  - Process tracking

  - System events/errors

  - Authentication/authorization checks

  - Data deletions, access, changes, and permission changes

  - Access attempts to systems that perform audit functions, including those used for log creation, storage, and analysis

  - API requests to service endpoints and administrative dashboards

  - Intrusion attempts

- Date and time stamp

- Success or failure indication

- Origination of event

- Identity or name of affected data, system component, or resource
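Emitting each entry as a structured record makes it easy to check that every required field is present and simplifies later analysis. The following is a minimal sketch; the field names and the JSON-lines output are assumptions for illustration, not a format the DPP prescribes.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    user_id: str     # user identification (an internal ID, not customer PII)
    event_type: str  # for example "data_change" or "authorization_check"
    timestamp: str   # date and time stamp, ISO 8601 in UTC
    success: bool    # success or failure indication
    origin: str      # origination of the event (service, host, or source IP)
    resource: str    # identity or name of the affected data, component, or resource


def audit_entry(user_id: str, event_type: str, success: bool, origin: str, resource: str) -> AuditEntry:
    """Build an entry stamped with the current UTC time."""
    now = datetime.now(timezone.utc).isoformat(timespec="seconds")
    return AuditEntry(user_id, event_type, now, success, origin, resource)


entry = audit_entry("op-4821", "data_change", True, "orders-api", "orders/123-456")
print(json.dumps(asdict(entry), sort_keys=True))  # one JSON object per log line
```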

Identification

Inadequate log information can hinder forensic investigations and prevent engineers from finding the root cause of incidents. Developers should identify all areas in their systems that must be logged. Amazon recommends documenting the parameters, API calls, and syslog events captured in these logs.

Security monitoring starts with answering the following questions:

- What parameters should we measure?

- How should we measure them?

- What are the thresholds for these parameters?

- How will escalation processes work?

- Where will logs be stored?

Log file design considerations

Developers should consider the following when designing log files:

- Collection: Note how your log files are collected. Log information is often collected by operating system, application, or third-party/middleware agents.

- Storage: Centralize log files generated on multiple instances to simplify log retention, analysis, and correlation.

- Transport: Transfer decentralized logs to a central location in a secure, reliable, and timely fashion.

- Taxonomy: Present different categories of log files in a format suitable for analysis.

- Analysis/correlation: Logs yield security intelligence only after the events in them are analyzed and correlated. Analyze logs in real time or at scheduled intervals.

- Protection/security: Log files are sensitive. Use network controls, identity and access management, encryption, data integrity authentication, and tamper-proof time-stamping to protect your log files.
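Data integrity authentication can be illustrated with an HMAC hash chain, where each entry's MAC also covers the previous entry's MAC. This is a sketch of one tamper-evidence technique, not a complete protection scheme: the key shown is a placeholder assumption (a real key would live in a key management service, separate from the logs), and deleting entries from the end of the chain is only detectable if the latest MAC is also stored elsewhere.

```python
import hashlib
import hmac

# Placeholder key for illustration; never hard-code a real key.
CHAIN_KEY = b"example-key-do-not-use"


def _mac(key: bytes, prev_mac: str, line: str) -> str:
    return hmac.new(key, (prev_mac + line).encode("utf-8"), hashlib.sha256).hexdigest()


def chain(lines: list[str], key: bytes = CHAIN_KEY) -> list[tuple[str, str]]:
    """Pair each log line with an HMAC over the line and the previous MAC."""
    entries, prev = [], ""
    for line in lines:
        prev = _mac(key, prev, line)
        entries.append((line, prev))
    return entries


def verify(entries: list[tuple[str, str]], key: bytes = CHAIN_KEY) -> bool:
    """Recompute the chain; an edited, reordered, or removed interior line breaks it."""
    prev = ""
    for line, mac in entries:
        expected = _mac(key, prev, line)
        if not hmac.compare_digest(expected, mac):
            return False
        prev = mac
    return True


log = chain(["login ok user=op-1", "role changed user=op-1", "logout user=op-1"])
print(verify(log))
```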

Security monitoring

Two key components of security and operational best practices are logging and monitoring API calls. Logging and monitoring are also requirements for industry and regulatory compliance. An organization should define its requirements and goals for performing logging and monitoring. The requirements should include all applicable laws, regulations, and existing organizational policies, such as data retention policies.

Security logs for developer systems can have multiple sources, because various network components generate log files. These include firewalls, identity providers (IdPs), data loss prevention (DLP) solutions, antivirus (AV) software, the operating system, platforms, and applications.

Many logs are related to security and must be included in the log file strategy. You should exclude logs that are unrelated to security from the security strategy. Logs should include all user activities, exceptions, and security events. Store logs for at least 90 days for future investigations.
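A deletion job should never remove logs younger than the retention minimum. The sketch below assumes log files can be listed with their creation times (the file names and dates are made up) and uses the 90-day DPP minimum; applicable law or business policy may require a longer period.

```python
from datetime import datetime, timedelta, timezone

# DPP minimum; applicable law or business policy may require a longer period.
MIN_RETENTION = timedelta(days=90)


def purgeable(log_files: dict[str, datetime], now: datetime) -> list[str]:
    """Return names of log files old enough to delete; everything else must be kept."""
    return sorted(name for name, created in log_files.items() if now - created >= MIN_RETENTION)


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
files = {
    "app-2025-02-01.log": datetime(2025, 2, 1, tzinfo=timezone.utc),  # 120 days old
    "app-2025-05-01.log": datetime(2025, 5, 1, tzinfo=timezone.utc),  # 31 days old
}
print(purgeable(files, now))  # ['app-2025-02-01.log']
```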

Developers using NIST 800-53 can reference control SI-4: System Monitoring. The intent of this control is to ensure that developers can adequately monitor their environments and receive notifications in the case of anomalous activity. Seemingly non-security-related metrics, such as an unexpected increase in cost or compute usage, can indicate an undetected security event. Be sure to consider such indicators when defining monitoring metrics. As developers identify new indicators, they should update all monitors for detecting and responding to repeated events. For guidance on responding, refer to the Incident Response (IR) control family in NIST 800-53.
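A simple way to turn a cost or compute metric into a monitor is to compare the latest value against a baseline built from recent history. The sketch below uses a mean-plus-k-standard-deviations rule; the choice of rule, the value of `k`, and the sample data are assumptions for illustration, and `history` needs at least two points.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, k: float = 3.0) -> bool:
    """Flag `latest` if it exceeds the baseline mean by more than k standard deviations."""
    baseline, spread = mean(history), stdev(history)
    return latest > baseline + k * spread


# Hypothetical daily compute hours for the past week.
daily_compute_hours = [100.0, 104.0, 98.0, 101.0, 97.0, 103.0, 99.0]
print(is_anomalous(daily_compute_hours, 180.0))  # a sudden jump worth investigating
```

A fixed threshold like this is easy to reason about; seasonal workloads may need a baseline per hour of day or day of week instead.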

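Additionally, developers should pay close attention to the control enhancements, because they can further protect the environment. For example, control enhancement SI-4(1), System-Wide Intrusion Detection System, connects individual intrusion detection tools into a system-wide intrusion detection system so that intrusions can generate notifications as soon as they occur.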

General requirements

- Define steps to access, review, or offload logs associated with every service.

- Do not log any Amazon customer personally identifiable information (PII) such as customer or seller names, address, e-mail address, phone number, gift message content, survey responses, payment details, purchases, cookies, digital fingerprint (for example, browser or user device), IP address, geo-location, or Internet-connected device product identifier.

- Do not turn on debug-level logging for a system that handles production customer data or traffic.

- Logs must be retained for at least 90 days for reference in case of a security incident.

- Review auditable events annually or when there is a significant change.

- Logs should be stored centrally and securely.

- All systems should use the same, synchronized time and date configuration. Otherwise, if an incident occurs, an engineer tracing events across systems will have difficulty correlating them when each system reports a different time.
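Clock synchronization itself happens at the host level (for example, through NTP), but applications can also avoid time-zone confusion by always writing UTC timestamps in an unambiguous format. A minimal sketch with Python's standard `logging` module follows; the ISO 8601 format string and logger name are choices made for this example.

```python
import logging
import sys
import time


def utc_handler(stream=sys.stderr) -> logging.Handler:
    """Handler whose timestamps are ISO 8601 in UTC, with millisecond precision."""
    handler = logging.StreamHandler(stream)
    formatter = logging.Formatter(
        fmt="%(asctime)s.%(msecs)03dZ %(levelname)s %(name)s %(message)s",
        datefmt="%Y-%m-%dT%H:%M:%S",
    )
    formatter.converter = time.gmtime  # render asctime in UTC instead of local time
    handler.setFormatter(formatter)
    return handler


logger = logging.getLogger("payments")
logger.setLevel(logging.INFO)  # no debug-level logging for production customer traffic
logger.addHandler(utc_handler())
logger.info("refund issued")
```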

Monitoring and alarms

In addition to monitoring moves, adds, changes, and deletes (MACD) events, you should monitor for software or component failure. Faults can result from hardware or software failure and might not be related to a security incident, even though they can have service and data availability implications.

A service failure can also be the result of deliberate malicious activity, such as a denial-of-service attack. In any case, faults should generate alerts, and developers should then use event analysis and correlation techniques to determine the cause of the fault, and examine whether it should initiate a security response.

When designing monitors and alarms:

- Build mechanisms that monitor the logs for all system activities and initiate investigative alarms on suspicious activities.

- Ensure alarming is in place for logs on suspicious events. Examples include multiple unauthorized calls, unexpected request rate and data retrieval volume, and access to canary data records.

- Define and regularly review the processes for every alarm. Examples include ticketing and operator notifications.
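An alarm on "multiple unauthorized calls" needs a threshold and a time window. The sketch below counts HTTP 401/403 responses per principal in a sliding window; the default threshold and window are hypothetical values to be tuned against your own baseline, and the code assumes events arrive in timestamp order.

```python
from collections import defaultdict, deque


class UnauthorizedCallMonitor:
    """Signals an alarm when one principal makes too many unauthorized calls in a window."""

    def __init__(self, threshold: int = 5, window_seconds: float = 60.0):
        self.threshold = threshold
        self.window = window_seconds
        self._failures = defaultdict(deque)  # principal -> timestamps of recent 401/403s

    def record(self, principal: str, status: int, ts: float) -> bool:
        """Record one API response; return True if it should trigger an alarm."""
        if status not in (401, 403):
            return False
        q = self._failures[principal]
        q.append(ts)
        while q and ts - q[0] > self.window:
            q.popleft()  # drop failures that fell out of the window
        return len(q) >= self.threshold


monitor = UnauthorizedCallMonitor(threshold=3, window_seconds=10)
for t in (0.0, 1.0, 2.0):
    if monitor.record("svc-unknown", 403, t):
        print(f"ALARM: repeated unauthorized calls from svc-unknown at t={t}")
```

Each alarm raised this way should feed the ticketing or operator-notification process defined for it.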

Monitoring tied to operations and their corresponding incident response plans should be reviewed on a regular basis. Developers should perform investigations when monitoring alarms are initiated, and these should be documented in the Developer's Incident Response Plan.

Protecting log information

You must protect logging facilities and log information against tampering and unauthorized access. Administrator and operator logs are common targets for erasing activity trails.

Common controls for protecting log information include:

- Verifying that audit trails are enabled and active for system components.

- Ensuring that only individuals who have a job-related need can view audit trail files.

- Ensuring access control mechanisms, physical segregation, and network segregation are in place to prevent unauthorized modifications to audit logs.

- Ensuring that current audit trail files are promptly backed up to a centralized log server or to media that is difficult to alter.

- Verifying that logs for external-facing technologies (for example, wireless, firewalls, DNS, mail) are offloaded or copied onto a secure, centralized internal log server or media.

- Using change detection software or file integrity monitoring that examines system settings and monitored files and reports the results of the monitoring activities.

- Obtaining and examining security policies and procedures to verify that they include procedures to review security logs at least daily and that follow-up to exceptions is required.

- Verifying that regular log reviews are performed for all system components.

- Ensuring that security policies and procedures include audit log retention policies and require audit log retention for a specified period of time, defined by the business and compliance requirements.
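The core idea behind file integrity monitoring is comparing current file digests against a trusted baseline. The sketch below shows that comparison with SHA-256 in a scratch directory; the file names are made up, and a real tool would also store the baseline somewhere an attacker cannot modify and watch permissions and metadata as well as contents.

```python
import hashlib
import tempfile
from pathlib import Path


def digest(path: Path) -> str:
    return hashlib.sha256(path.read_bytes()).hexdigest()


def snapshot(paths: list[Path]) -> dict[str, str]:
    """Record a SHA-256 digest for each monitored file (the trusted baseline)."""
    return {str(p): digest(p) for p in paths}


def changed_files(baseline: dict[str, str], paths: list[Path]) -> list[str]:
    """Return monitored files that vanished or whose contents differ from the baseline."""
    return [str(p) for p in paths if not p.exists() or digest(p) != baseline.get(str(p))]


def demo() -> list[str]:
    """Tamper with one of two files in a scratch directory and report what changed."""
    with tempfile.TemporaryDirectory() as tmp:
        audit = Path(tmp, "audit.log")
        config = Path(tmp, "logging.conf")
        audit.write_text("2025-01-10T08:00:01Z login ok\n")
        config.write_text("level=INFO\n")
        baseline = snapshot([audit, config])
        audit.write_text("")  # simulate an attacker erasing the activity trail
        return [Path(p).name for p in changed_files(baseline, [audit, config])]


print(demo())  # ['audit.log']
```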

Logging and monitoring best practices

The distributed nature of logs, inconsistent log formats, and log volume all make managing log generation, storage, and analysis challenging. An organization should follow a few key practices to avoid or address these challenges:

- Prioritize log management appropriately and define its requirements and goals: Organizations should ensure logging and monitoring mechanisms comply with applicable laws, regulations, contractual requirements, and existing organizational policies.

- Establish policies and procedures: Proper policies and procedures ensure a consistent approach throughout the organization. They also ensure that laws and regulatory requirements are met. Periodic audits, testing, and validation are mechanisms to confirm that logging standards and guidelines are being followed throughout the organization.

- Create and maintain a secure log management infrastructure: It is very helpful for an organization to implement a log management infrastructure and determine how components interact with one another. This aids in preserving the integrity of log data from accidental or intentional modification or deletion, and also in maintaining the confidentiality of log data. It is critical to create an infrastructure robust enough to handle expected volumes of log data, including peak volumes during extreme situations such as a widespread malware incident, penetration testing, and vulnerability scans.

- Provide adequate support and training for all staff with log management responsibilities: Support includes providing log management tools and associated documentation, providing technical guidance on log management activities, and distributing information to log management staff.

The National Institute of Standards and Technology (NIST) places logging controls in the Audit and Accountability (AU) domain and monitoring controls in the System and Information Integrity (SI) domain. Note that in addition to the controls presented in this section, logs must abide by the Access Control, Least Privilege, and Encryption and Storage requirements of the Amazon DPP.

Using Amazon CloudWatch alarms for notifications

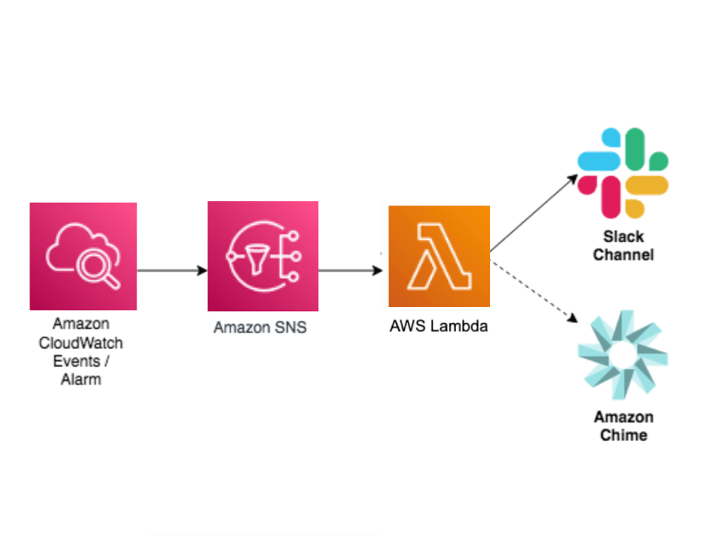

When developing, deploying, and supporting business-critical applications, timely system notifications are crucial to keeping services up and running reliably. For example, a team that actively collaborates using Amazon Chime or Slack might want to receive critical system notifications directly within team chat rooms. This is possible using Amazon Simple Notification Service (SNS) with AWS Lambda.

Amazon CloudWatch alarms let you set metric thresholds and send alerts to Amazon SNS. Amazon SNS can send notifications by e-mail, to HTTP(S) endpoints, and as Short Message Service (SMS) messages to mobile phones. Amazon SNS can also invoke a Lambda function.

Amazon SNS doesn’t currently support sending messages directly to Amazon Chime chat rooms, but a Lambda function can be inserted between them. By initiating a Lambda function from Amazon SNS, the event data can be consumed from the CloudWatch alarm and a human friendly message can be crafted before sending it to Amazon Chime or Slack.
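A Lambda function for this pattern might look like the sketch below. It assumes the SNS record carries the CloudWatch alarm as a JSON string in its `Message` field with `AlarmName`, `NewStateValue`, `NewStateReason`, and `StateChangeTime` keys. The environment variables `WEBHOOK_URL` and `CHAT_PLATFORM` are hypothetical names for this example, and the webhook payload shapes (`{"Content": ...}` for Amazon Chime, `{"text": ...}` for Slack) should be checked against each service's current webhook documentation.

```python
import json
import os
import urllib.request


def format_alarm(alarm: dict) -> str:
    """Turn the CloudWatch alarm JSON into a short, human-friendly chat message."""
    return (
        f"[{alarm['NewStateValue']}] {alarm['AlarmName']}\n"
        f"Reason: {alarm['NewStateReason']}\n"
        f"Changed at: {alarm['StateChangeTime']}"
    )


def build_payload(text: str, platform: str) -> bytes:
    # Chime incoming webhooks take {"Content": ...}; Slack takes {"text": ...}.
    body = {"Content": text} if platform == "chime" else {"text": text}
    return json.dumps(body).encode("utf-8")


def lambda_handler(event, context):
    # SNS delivers each alarm as a JSON string in the record's Message field.
    for record in event["Records"]:
        alarm = json.loads(record["Sns"]["Message"])
        payload = build_payload(format_alarm(alarm), os.environ.get("CHAT_PLATFORM", "slack"))
        request = urllib.request.Request(
            os.environ["WEBHOOK_URL"],  # hypothetical variable holding the chat webhook URL
            data=payload,
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(request, timeout=10) as response:
            response.read()
    return {"status": "sent"}
```

Keeping formatting separate from delivery makes the message logic testable without calling the webhook.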

The architectural diagram above demonstrates how the various components work together to create this solution.

For more information, refer to the AWS Knowledge Center.

Centralized storing and reporting on AWS

This section discusses the AWS solution for log ingestion, log processing, and log visualization.

From an operational and security perspective, API call logging provides the data and context required to analyze user behavior and understand certain events. API call and IT resource change logs can also be used to demonstrate that only authorized users have performed a certain task in an environment, in alignment with compliance requirements. However, given the volume and variability of logs from different systems, it can be challenging in an on-premises environment to gain a clear understanding of the activities users have performed and the changes made to IT resources.

AWS provides a centralized logging solution that enables organizations to collect, analyze, and display Amazon CloudWatch Logs in a single dashboard. This solution uses Amazon OpenSearch Service with the dashboard visualization tool OpenSearch Dashboards, and provides a single web console to ingest both application logs and AWS service logs into Amazon OpenSearch Service domains.

OpenSearch Dashboards helps visualize not only log and trace data, but also machine-learning-powered results for anomaly detection and search relevance ranking. In combination with other AWS managed services, this solution provides centralized log ingestion across multiple Regions and accounts, one-click creation of codeless end-to-end log processors, and templated visualization dashboards that complement Amazon OpenSearch Service.

You can deploy a centralized logging architecture automatically on AWS. For more information, refer to Centralized Logging on AWS. For more information on logging best practices in AWS, refer to the Introduction to AWS Security.

Conclusion

This whitepaper discussed the importance of logging and monitoring. All traffic on the network should be monitored through logs that contain the information needed to trace actions taken (event status, date and time, access attempts, data changes, and system actions). It is important to plan and implement a log monitoring process and log identification parameters to ensure that only authorized users are performing actions, in compliance with requirements. A Security Information and Event Management (SIEM) tool helps set thresholds for normal network traffic and sends an alert in case of a security event. Storing logs in a centralized location with limited access is a fundamental step that helps ensure log data is not altered or corrupted. By following these steps, developers can monitor network traffic and receive alerts on unusual activity, making it easier to respond to possible security threats within network instances.

Further reading

For additional information, see:

- Data Protection Policy

- Acceptable Use Policy

- Introduction to AWS Security AWS Whitepaper

- NIST 800-53 Rev.5: Security and Privacy Controls for Information Systems and Organizations

Notices

Amazon sellers and developers are responsible for making their own independent assessment of the information in this document. This document: (a) is for informational purposes only, (b) represents current practices, which are subject to change without notice, and (c) does not create any commitments or assurances from Amazon.com Services LLC (Amazon) and its affiliates, suppliers or licensors. Amazon Services API products or services are provided “as is” without warranties, representations, or conditions of any kind, whether express or implied. This document is not part of, nor does it modify, any agreement between Amazon and any party.

© 2025 Amazon.com Services LLC or its affiliates. All rights reserved.
